Install Freenas On Ssd Guide

This guide will install FreeNAS 10 (Corral) under VMware ESXi 6.5, then share ZFS-backed storage back to VMware via NFS. This is an update of my.

"Hyperconverged" Design Overview

FreeNAS is installed as a virtual machine on the VMware hypervisor. An LSI HBA in IT mode is passed to FreeNAS via VT-d passthrough. A ZFS pool is created on the disks attached to the HBA. ZFS provides RAID-Z redundancy. An NFS dataset is then shared from FreeNAS and mounted from VMware, where it provides storage for the remaining guests.

Optionally, containers and VM guests can run directly on FreeNAS itself using bhyve.

FreeNAS Corral

FreeNAS 10 (now called FreeNAS Corral) is a major rewrite of FreeNAS 9.10. The GUI has been overhauled, and it now has a CLI and an API. I think the best features are the bhyve hypervisor and Docker support. For a single all-in-one hypervisor+NAS server, you may not even need VMware; bhyve and Docker might be enough.

Like anything new, I advise caution about running it in a production environment.

I do see quite a few rough edges and a few missing features that are available in FreeNAS 9.10. I imagine we’ll see frequent updates with polishing and features added.


A good rule of thumb is to wait until TrueNAS hardware is shipping with the "Corral" version. I think this is the best release of FreeNAS yet, and it is going to be a great platform moving forward!

1. Get Hardware

This is based on my. For drives I used 4 x White Label NAS-class HDDs (see ) and two Intel DC S3700s. Similar models between the S3500 and S3720 should be fine, and they often show up for a decent price on eBay. One SSD will be used to boot VMware and provide the initial datastore. The other will be used as a ZIL (SLOG) device.

You will need an HBA to pass storage to the FreeNAS guest. I suggest the IBM ServeRAID M1015 flashed to IT mode, or you can usually find the for a decent price on eBay. You will also need a.

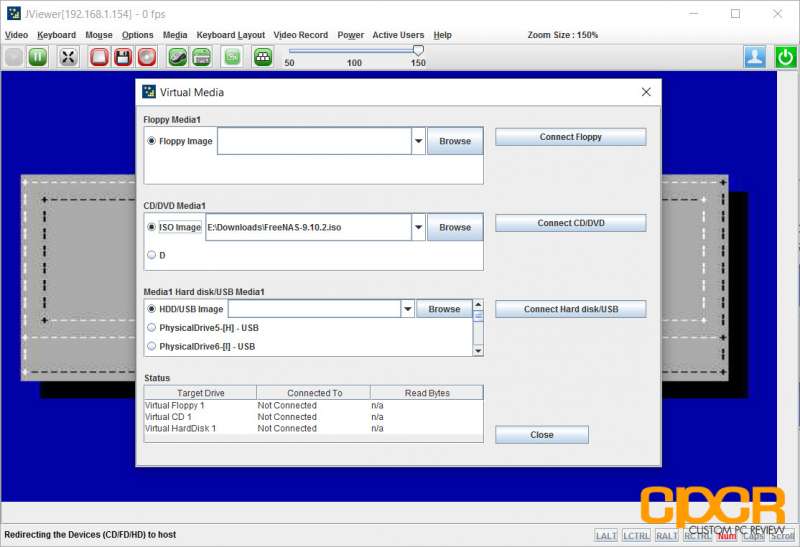

2. IPMI Setup

Go ahead and plug network cables into the IPMI management port and into at least one of the normal Ethernet ports. This should work with just about any server-class Supermicro board. First download the (I just enter "Private" for the company). Once it's installed, run "IPMIView20" from the Start Menu. You may need to run it as Administrator.

Scan for IPMI devices. Once it finds your Supermicro server, select it and Save. Log in to IPMI using ADMIN / ADMIN (you'll obviously want to change that).

KVM Console Tab

Load the VMware ISO file into the virtual DVD-ROM drive. Download the. Select ISO File, Open Image, select the VMware ISO file (which you can download here), and then hit "Plug In".

Power on and hit Delete repeatedly to enter setup. Change the boot order. I made the ATEN Virtual CD/DVD the primary boot device and the Intel SSD DC S3700 that I'll install VMware to the secondary, and disabled everything else. Save and Exit, and it should boot the VMware installer ISO.

3. Install VMware ESXi 6.5.0

Install to the Intel SSD drive. Once installation is complete, "Plug Out" the virtual ISO file before rebooting. Once it comes up, get the IP address. You can also set a static IP, which I highly recommend.

4. PCI Passthrough HBA

Go to that address in your browser (I suggest Chrome). Under Manage, Hardware, PCI Devices, select the LSI HBA card and Enable Passthrough. Reboot.

5. Setup VMware Storage Network

In the examples below, my LAN / VM Network is on 10.2.0.0/16 (255.255.0.0) and my storage network is on 10.55.0.0/16, on VLAN 55. You may need to adjust these for your network.

I like to keep my storage network separate from my LAN / VM Network, so we'll create a VM Storage Network port group with a VLAN ID of 55. Go to Networking, Port groups, Add Port Group, and add VM Storage Network with VLAN ID 55. (You can choose a different VLAN ID. My storage network is 10.55.0.0/16, so I use "55" to match it and don't have to remember which VLAN goes to which network. It doesn't have to match.) Add a second port group just like it called Storage Network, with the same VLAN ID (55).

Add a VMkernel NIC. Attach it to the Storage Network and give it an address of 10.55.0.4 with a netmask of 255.255.0.0. You should end up with this.

6. Create a FreeNAS Corral VM

Select FreeBSD (64-bit) as the guest OS and install it to the DC S3700 datastore that VMware is installed on. Add a PCI device and select your LSI card. Add a second NIC for the VM Storage Network. You should have two NICs for FreeNAS, one on VM Network and one on VM Storage Network. Set the adapter type to VMXNET 3 on both.

I usually give my FreeNAS VM 2 cores. If you're doing anything heavy, especially running Docker images or bhyve under it, you may want to increase that count.
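Before creating the port groups, you might want to sanity-check the storage-network addressing from step 5. Below is a minimal sketch using Python's standard `ipaddress` module. The subnets, VLAN 55 and the 10.55.0.4 VMkernel address are the example values from the guide. The helper name `check_storage_plan` and the specific checks are my own illustrative assumptions; they are not part of ESXi or FreeNAS.

```python
import ipaddress

# Example values from the guide; substitute your own networks.
LAN = ipaddress.ip_network("10.2.0.0/16")        # LAN / VM Network
STORAGE = ipaddress.ip_network("10.55.0.0/16")   # Storage network
VMKERNEL_IP = ipaddress.ip_address("10.55.0.4")  # VMkernel NIC on the Storage Network
STORAGE_VLAN = 55                                # VLAN ID on both storage port groups


def check_storage_plan(lan, storage, vmk_ip, vlan_id):
    """Hypothetical helper: return a list of problems (empty if the plan looks consistent)."""
    problems = []
    if lan.overlaps(storage):
        problems.append("LAN and storage networks overlap")
    if vmk_ip not in storage:
        problems.append(f"VMkernel IP {vmk_ip} is not inside {storage}")
    elif vmk_ip in (storage.network_address, storage.broadcast_address):
        problems.append(f"VMkernel IP {vmk_ip} is the network or broadcast address")
    # A port group tagged with a single VLAN uses an ID from 1 to 4094.
    if not 1 <= vlan_id <= 4094:
        problems.append(f"VLAN ID {vlan_id} is outside 1-4094")
    return problems


if __name__ == "__main__":
    print("storage netmask:", STORAGE.netmask)  # 255.255.0.0 for a /16
    print(check_storage_plan(LAN, STORAGE, VMKERNEL_IP, STORAGE_VLAN) or "plan looks consistent")
```

The sketch deliberately does not enforce the "VLAN 55 matches 10.55.x.x" convention, since that is only a memory aid.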

One rule with VMware is to not give VMs more cores than they need. I usually give each VM one core and only consider more if that particular VM needs more resources. This reduces the risk of CPU co-stops. Gabrie van Zanten's is a good read.

ZFS needs memory: FreeNAS 10 needs 8GB of memory minimum. Lock it (reserve all guest memory). Make the hard disk VMDK 16GB.

There's an issue with the VMware 6.5 SCSI controller on FreeBSD/FreeNAS. You'll know it if you see an error like:

UNMAP failed. Disabling BIODELETE
UNMAP CDB: 42 00 00 00 00 00 00 00 18 00
CAM status: SCSI Status Error
SCSI status: Check Condition
SCSI sense: ILLEGAL REQUEST asc:26,0 (Invalid field in parameter list)
Command byte 0 is invalid
Error 22, Unretryable error

To prevent this, change the Virtual Device Node on the hard drive to SATA controller 0. SCSI Controller 0 should be LSI Logic SAS.

Add a CD/DVD drive. Under CD/DVD Media, hit Browse to upload and select the FreeNAS Corral ISO file, which you can.

7. Install FreeNAS VM

Power on the VM and select the VMware disk to install to. If you create two VMDKs, you can select them both at this screen and the installer will create a ZFS boot mirror. If you have an extra hard drive, you can create another VMware datastore on it and put the second VMDK there. This would provide some extra redundancy for the FreeNAS boot pool.

In my case I know the DC S3700s are extremely reliable. If I lost the FreeNAS OS, I could just re-import the pool or fail over to my secondary FreeNAS server. Boot via BIOS.

Once FreeNAS is installed, reboot. You should see the IP from DHCP on the console (once again, I suggest setting a static IP). If you hit that IP with a browser, you should get a login screen!

8. Update and Reboot

Before doing anything else, go to System, Updates, Update and Reboot. (Note: to get better insight into a task's progress, head over to the Console and type: task show.)

9. Setup SSL Certificate

First, set your hostname and create a DNS entry pointing at the FreeNAS IP.

Create an internal CA, then export the certificate. Untar the file and click HobbitonCA.crt to install it into the Trusted Root Certification Authorities store. Be aware that if someone compromised your CA or obtained its key, they could perform a MITM attack on you by forging SSL certificates for other sites.

Create a certificate for FreeNAS, set the web UI to listen on HTTP+HTTPS, and select the certificate.

I also increase the token lifetime, since I religiously lock my workstation when I'm away. And now SSL is secured.

10. Create Pool

Do you want performance, capacity, or redundancy? Drag the white circle where you want it on the triangle, and FreeNAS will suggest a zpool layout. With 4 disks I chose "Optimal", and it suggested RAID-Z, which is what I wanted.
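The triangle trades capacity against redundancy. To compare the layouts FreeNAS might suggest for four disks, here is a back-of-the-envelope Python sketch. It assumes 4 TB drives, since the guide doesn't state the drive size. It also ignores ZFS metadata, padding and the free-space headroom you should keep, so treat the numbers as upper bounds rather than what FreeNAS will actually report.

```python
# Back-of-the-envelope usable capacity for common 4-disk layouts.
# Assumption: 4 TB drives; real usable space is lower (metadata, padding, headroom).

def raidz_usable_tb(disks, disk_tb, parity=1):
    """Single RAID-Z vdev: roughly (disks - parity) drives' worth of data."""
    if disks <= parity:
        raise ValueError("a RAID-Z vdev needs more disks than parity drives")
    return (disks - parity) * disk_tb


def mirrors_usable_tb(disks, disk_tb, width=2):
    """Striped mirrors: one drive's worth of data per mirror vdev."""
    if disks % width:
        raise ValueError("disk count must be a multiple of the mirror width")
    return (disks // width) * disk_tb


if __name__ == "__main__":
    disks, size_tb = 4, 4
    layouts = {
        "RAID-Z1 (survives 1 failure)": raidz_usable_tb(disks, size_tb, parity=1),
        "RAID-Z2 (survives any 2 failures)": raidz_usable_tb(disks, size_tb, parity=2),
        "2 x 2-way mirrors (survives 1 failure per mirror)": mirrors_usable_tb(disks, size_tb),
    }
    for name, tb in layouts.items():
        print(f"{name}: ~{tb} TB usable")
```

With four disks, RAID-Z1 keeps the most space. That is the layout FreeNAS suggested here.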

Be sure to add the other SSD as a SLOG / ZIL / LOG device.

11. Create Users

It's probably best not to log in as root all the time. Create some named users with Administrator access.

12. Create Top Level Dataset

I like to create a top level dataset with a unique name on each FreeNAS server. That makes it easier to replicate datasets to my other FreeNAS servers. It also lets me run recursive tasks (such as snapshots or replication) on that top level dataset without having to micromanage them.

I know you can sometimes run recursive tasks on the entire pool. However, I often want to exclude certain datasets from those tasks, such as datasets being replicated from another server. If you'd like to see more on my reasoning for using a top level dataset, see my

Storage, tank3, Datasets, New.

13. Setup Samba

Under Services, Sharing, SMB, set the NetBIOS name and Workgroup and Enable. Then go to Storage, SMB3, Share to create a new dataset with a Samba share. Be sure to set the ownership to a user.

14. Setup NFS Share for VMware

I believe VMware and FreeNAS don't currently work together on NFSv4, so it's best to stick to NFSv3 for now. Mount the NFS share in VMware by going to Storage, Datastores, New datastore, Mount NFS datastore.

15. Snapshots

I set up automatic recursive snapshots on the top level dataset. I like to do pruning snapshots like this:

every 5 minutes - keep for 2 hours
every hour - keep for 2 days
every day - keep for 1 week
every week - keep for 4 weeks
every 4 weeks - keep for 12 weeks

Samba also has Previous Versions integration with ZFS snapshots, which is great for letting users restore their own files.

16. ZFS Replication to Backup Server

Before putting anything into production, set up automatic backups, preferably one onsite and one offsite. Under Peering, New FreeNAS, enter the details for your secondary FreeNAS server. Now you'll see why I created a top level dataset under the pool.

Go to Storage, tank3, Replications, New. Select the stor2.b3n.org peer. The source dataset is your top level dataset, tank3/ds4, and the target dataset is tank4/ds4 on the backup FreeNAS server. Compression should be FAST over a LAN or BEST over a slow WAN.

Go to another menu option and then back to Storage, tank3, Replications, replicationds4, and Start the replication. Check back in a couple of hours to make sure it's working.
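To get a feel for how many snapshots the pruning schedule in the Snapshots step keeps per dataset, here is a small Python sketch. It assumes each task deletes its snapshots once they exceed their retention period. Under that assumption the steady-state count is roughly retention divided by interval, possibly off by one depending on exactly when pruning runs. The schedule values come from the guide; the rest is illustrative.

```python
from datetime import timedelta

# (interval, retention) for each periodic snapshot task, from the Snapshots step.
SCHEDULE = [
    (timedelta(minutes=5), timedelta(hours=2)),
    (timedelta(hours=1), timedelta(days=2)),
    (timedelta(days=1), timedelta(weeks=1)),
    (timedelta(weeks=1), timedelta(weeks=4)),
    (timedelta(weeks=4), timedelta(weeks=12)),
]


def snapshots_kept(schedule):
    """Approximate steady-state snapshot count per task: retention // interval."""
    return [retention // interval for interval, retention in schedule]


if __name__ == "__main__":
    counts = snapshots_kept(SCHEDULE)
    print(counts)                          # [24, 48, 7, 4, 3]
    print("total per dataset:", sum(counts))  # 86
```

Because the snapshots are recursive on the top level dataset, each child dataset carries roughly this many snapshots too.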

My first replication attempt hung, so I canceled the task and started it again. Adjusting the peer interval under Peering from 1 minute to 5 seconds may also have helped.

16.1 Offsite Backups

It's also a good idea to have offsite backups. You could use S3, a CrashPlan Docker container, etc.

17. Setup Notifications

You want to be notified when something fails. FreeNAS can be configured to send an email or Pushbullet notifications. Here's how to set up Pushbullet:

1. Create or log in to your Pushbullet account.
2. Under Settings, Account, create an access token.
3. In FreeNAS, go to Services, Alerts & Reporting and add the access token (bottom right).
4. Configure the alerts to be sent via Pushbullet.

You can use the Pushbullet Chrome extension or the Android/iOS apps to receive alerts.
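FreeNAS sends the Pushbullet alerts itself. If you want to confirm that an access token works before pasting it into Alerts & Reporting, a minimal Python sketch like this builds a request against Pushbullet's v2 pushes endpoint using only the standard library. The token is a placeholder and `build_pushbullet_note` is a hypothetical helper. The actual send is left commented out so nothing goes out by accident.

```python
import json
import urllib.request

PUSHES_URL = "https://api.pushbullet.com/v2/pushes"


def build_pushbullet_note(access_token, title, body):
    """Build (but don't send) a POST that creates a Pushbullet 'note' push."""
    payload = json.dumps({"type": "note", "title": title, "body": body}).encode("utf-8")
    return urllib.request.Request(
        PUSHES_URL,
        data=payload,
        headers={"Access-Token": access_token, "Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_pushbullet_note("YOUR_ACCESS_TOKEN", "FreeNAS test", "Alerts are working")
    print(req.get_method(), req.full_url)
    # To actually send it (requires network access and a real token):
    # with urllib.request.urlopen(req, timeout=10) as resp:
    #     print(resp.status)
```

Note that `urllib.request.Request` normalizes header names (for example, `Access-Token` is stored as `Access-token`). HTTP header names are case-insensitive, so the server sees the same header.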

18. Bhyve VMs and Docker Containers under FreeNAS under VMware

Add another port group on your VM Network that allows Promiscuous mode, MAC address changes and Forged transmits. Connect FreeNAS, and only VMs you really trust, to this port group.

Power down and edit the FreeNAS VM. Change the VM Network to VM Network Promiscuous. Enable nested virtualization under CPU, Hardware virtualization by checking "Expose hardware assisted virtualization to the guest OS". After booting back up, you should be able to create VMs and Docker containers in FreeNAS under VMware.

And more. Use at your own risk. More topics may come later if I ever get around to it.

Hi Ben, I tried virtualizing Corral on ESXi 6.5, very similar to your steps above. I got it working, with one problem. On bare metal I get 650MBps/650MBps read/write over a 10Gbit network, but when I virtualize Corral I'm only getting about 250/150.

Did you notice any drastic performance hits in the VM environment? I'm using a Mellanox ConnectX-2 NIC on the ESXi host. The machine is definitely not CPU or RAM limited. I'm using the E1000 NIC driver (I've also tried the VMXNET3 driver). Thanks!

I have not had a chance to do any performance testing. I don't have any 10GbE networking, so I'm typically maxing out around 90Mbps.

I have not tested VM to VM within ESXi yet. I actually want to experiment with eliminating VMware from my stack and just using FreeNAS+bhyve and Docker. Running FreeNAS Corral in VMware lets me use both in the interim, for all my existing VMs, until I can move everything over to FreeNAS. The main hurdle is figuring out how to pass an optical Blu-ray/DVD/CD device to a bhyve guest or Docker container for my Automatic Ripping Machine.

Maybe a stupid question, I am still learning, sorry. If I use that NFS share from the FreeNAS VM and connect to it from a Windows VM or a Linux VM (all on the same ESXi host!), will all the data loop back? Does the traffic stay on the virtual network, or will it actually go through the NIC? I am asking because I want to run an iTunes server in OS X or Windows, and I need to connect it to a FreeNAS share. So there will be at least 3 VMs on the ESXi host: one Windows, one OS X and one FreeNAS. The library is already on my FreeNAS storage, which is currently standalone but which I will move into ESXi. I have to run iTunes from either OS X or Windows. If I follow this guide, will all the data be looped back?

I don't think I'm going to use it as data storage for ESXi for now, so maybe NFS is not needed. But I would like to know whether the traffic goes in and out through the NIC or stays inside ESXi.

First off, GREAT guide. I used the last one you had for ESXi 6.0 and FreeNAS 9.10 too.

I'm having a bit of an issue with "bursty" traffic when doing file transfers from Samba shares. I have FreeNAS virtualized just like you do. The one difference is that I pass two physical 1Gb Intel NICs through for NFS traffic and use one VMXNET 3 NIC for Samba. When I start a file transfer across the VMXNET 3 NIC, the connection is maxed out for a short period. It then drops to very slow speeds for the rest of the transfer, with intermittent bursts of speed.

My FreeNAS VM has 2 vCPUs and 8GB of RAM assigned to it. Any idea what could be causing this? I see the bursting on both reads and writes with large files. I would understand the behavior with numerous small files, but I am at a loss with large files.

I don't have a ZIL/SLOG configured at the moment, but I have a spare 120GB SSD lying around. I wasn't sure which option would give me the best performance, or whether there was a way to split the SSD in half for the ZIL and the SLOG. Also, have you tried using iSCSI for your VMware storage?

Just wanted to see if you've seen better performance from it than from NFS with your VMs.

Regards,
MG

When you said to use half for the ZIL and half for the SLOG, I think I need to clear up some misconceptions.

The ZIL (ZFS intent log) is what ZFS uses to log synchronous writes before they are committed to the pool. ZFS always has a ZIL unless it's disabled. Without an SSD as a SLOG (separate log) device, ZFS puts the ZIL on your main storage pool, which is probably slow spinning disks. That's really slow, because ZFS first writes to the ZIL and then writes the data to the pool. So without a SLOG, every sync write hits the same disks twice. In short, you only need one SSD, used as a SLOG to hold the ZIL. I think FreeNAS Corral calls it a "LOG" device when you set up your pool.

For sync-heavy workloads, ZFS performs so badly without an SSD SLOG that I wouldn't even try to run it that way. You can, however, disable the ZIL (zfs set sync=disabled tank/dataset). Writes held in memory then won't get written to the ZIL.

I don't recommend running this way in production, as a power loss can result in data loss. But you can at least disable the ZIL temporarily to see if it's your bottleneck.

For iSCSI, I have seen numerous environments where FreeNAS has had issues ever since it switched to the kernel iSCSI target in 9.3 (see comments on ). I also think you lose a lot of the advantages of ZFS when you use it as block storage instead of making it aware of the filesystem. So I don't use iSCSI and prefer SMB / NFS. When I do need iSCSI, I use OmniOS+napp-it, as COMSTAR is very stable.

That said, I have not tested iSCSI on Corral, so it might be more stable now. I believe VMware doesn't honor guest sync writes over iSCSI. That means it will perform faster for you since you don't have a SLOG, but you would also lose data if you lost power. On NFS, VMware forces a sync whether or not the guest requested one, so you really, really need a SLOG in that situation.
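The reply above boils down to a simple accounting of where each synchronous write lands. Here is a deliberately simplified Python model of it, assuming every write is synchronous, as with VMware over NFS. Real ZFS has exceptions. For example, large writes, or setting the `logbias=throughput` property, can send data straight to the pool with only a pointer in the log. Treat this as an illustration of the reasoning, not a performance model. The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SyncWritePath:
    pool_writes: int              # times the data is written to the main (spinning) pool
    slog_writes: int              # times the data is written to a separate log (SLOG) device
    ack_waits_on_pool: bool       # does the client's sync acknowledgement wait on pool disks?
    acked_data_safe_on_power_loss: bool


def sync_write_path(has_slog, sync_disabled=False):
    """Simplified model of one synchronous write, per the explanation above."""
    if sync_disabled:
        # sync=disabled: acknowledged from RAM and written later with the transaction
        # group, so recently acknowledged writes can be lost on power failure.
        return SyncWritePath(1, 0, False, False)
    if has_slog:
        # ZIL lives on the SSD; the pool receives the data once, later.
        return SyncWritePath(1, 1, False, True)
    # No SLOG: the ZIL lives on the pool, so the same disks are written twice.
    return SyncWritePath(2, 0, True, True)


if __name__ == "__main__":
    for label, args in [("no SLOG", (False,)), ("SSD SLOG", (True,)), ("sync=disabled", (False, True))]:
        print(label, sync_write_path(*args))
```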

Wow, looks like FreeNAS Corral is dead already. It’s pretty rare to see a “stable” release get abandoned by a software company. Sounds like the FreeNAS Corral leaders were pushing hard for a release before it was ready. Per several developers at FreeNAS were afraid to voice their concerns about Corral until the Corral leadership team left.Glad I held back upgrading my main system as per my policy of sticking with the same FreeNAS version as is shipping with TrueNAS. I expected a lot of bugs to be worked out with Corral–but I didn’t expect it to get pulled. While this may hurt their reputation, FreeNAS should be commended for now being transparent about what’s going on and fortunately early enough that the majority of people wouldn’t have upgraded production systems.

Thanks for the guide, it was super helpful. I'm running FreeNAS 9.10 on ESXi 6.5, so I used pieces of this guide and the prior version and got it working with no problem. I am curious: what do you do to back up your datastore/FreeNAS VM? The best I can come up with is to back up to the NFS share. Then, if the FreeNAS SSD were to fail, I'd accept the downtime to replace the SSD, reinstall FreeNAS, and restore VMs from the NFS backup.

It's not optimal, but it's about the only option I can think of, short of getting a second RAID controller and putting 2 SSDs in RAID 1 for the datastore. I manually back up the FreeNAS configuration from time to time, usually just after I make a change.

You don't need a RAID card to get RAID protection on FreeNAS.


You can create boot VMDKs for FreeNAS on two separate drives. If you install FreeNAS to both (select them both at the installer screen), it will set up a ZFS mirror. The VM config for FreeNAS will still be on a single drive. If you lost that drive, you could re-create the VM and, instead of adding a new hard drive, import the remaining VMDK in a matter of minutes.

How to Install FreeNAS

Basic Requirements

Boot device: 8 GiB is the absolute minimum, and 16 GiB is recommended. 64-bit hardware is required for current FreeNAS releases. Intel processors are strongly recommended. 8 GB of RAM is required, with more recommended.

FreeNAS 9.2.1.9 was the last release that supported 32-bit hardware and UFS filesystems. SSDs, SATADOMs, or USB sticks can be used for boot devices.

SSDs are recommended. 8 GB of RAM is the absolute minimum requirement. 1 GB per terabyte of storage is a standard starting point for calculating additional RAM needs, although actual needs vary. ECC RAM is strongly recommended. Directly-connected storage disks are necessary for FreeNAS to provide fault tolerance.

Hardware RAID cards are not recommended because they prevent this direct access and reduce reliability. For best results, see for supported HBA disk controllers. LSI/Avago/Broadcom HBAs are the best choice with FreeNAS. NAS-specific hard drives like WD Red are recommended. Intel or Chelsio 1 GbE or 10 GbE Ethernet cards are recommended.
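To turn those rules of thumb into numbers, here is a small Python sketch. It assumes one reading of the text: 8 GB base plus roughly 1 GB per TB of storage, along with the 8 GiB / 16 GiB boot-device thresholds. The text doesn't say whether to count raw or usable TB, so the example uses the raw capacity of four hypothetical 4 TB drives. Treat the result as a starting point, not a sizing guarantee. The function names are hypothetical.

```python
import math

MIN_RAM_GB = 8            # absolute minimum from the requirements above
RAM_GB_PER_TB = 1         # rule-of-thumb starting point for additional RAM
MIN_BOOT_GIB = 8
RECOMMENDED_BOOT_GIB = 16


def suggested_ram_gb(storage_tb):
    """8 GB base plus ~1 GB per TB of storage, rounded up to a whole GB."""
    return MIN_RAM_GB + math.ceil(storage_tb * RAM_GB_PER_TB)


def boot_device_status(size_gib):
    """Classify a boot device size against the stated minimum and recommendation."""
    if size_gib < MIN_BOOT_GIB:
        return "too small"
    if size_gib < RECOMMENDED_BOOT_GIB:
        return "meets minimum"
    return "recommended size"


if __name__ == "__main__":
    print(suggested_ram_gb(4 * 4))   # four hypothetical 4 TB drives, raw: 24
    print(boot_device_status(16))    # e.g. the 16GB boot VMDK from the VM setup step
```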

Business-Class Systems

When using FreeNAS in a business setting, hardware requirements are defined by capacity, performance, reliability, and support needs.